Introduction – AWS ECS

Many of us are already familiar with the rise of microservices, and architecting applications around microservices is a major focus for developers right now. Running them at scale in a cost-effective manner while maintaining high availability, however, can be a real challenge.

Docker solves some problems and eases the development and deployment process. However, running and managing many Docker containers at large scale is hard, and it is even harder when you want your components to be resilient and to scale dynamically as needed.

Amazon introduced EC2 Container Service (ECS), since renamed Amazon Elastic Container Service, in 2015 as a response to the rapidly growing popularity of Docker containers and microservices architecture. ECS is designed to be a highly scalable, highly available, low-latency container management service.

ECS is an alternative to tools such as Kubernetes, Docker Swarm, or Mesos with Marathon. So, if someone asked me to define ECS in one line, I would say that “ECS manages and deploys Docker containers.” That’s really it. I could list several other tasks it performs, but it performs all of them in order to manage and deploy Docker containers.

In my experience with ECS so far, it is hard to cover ECS in depth in a single post, so I will try to touch on all of its important high-level components.

Core components of ECS

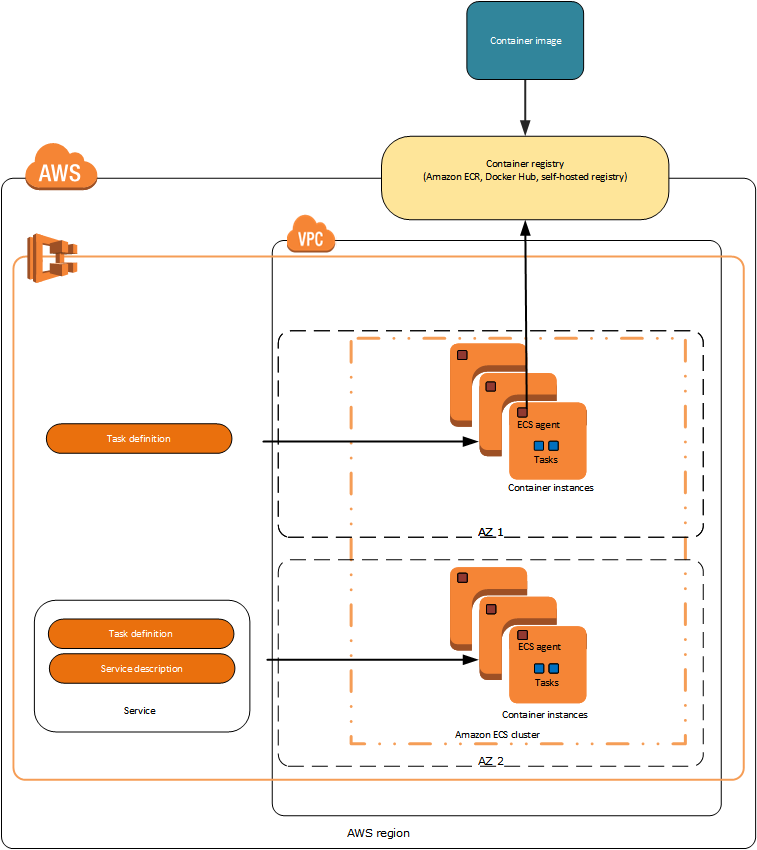

1) Cluster, Container Agent, and Container Instances

A cluster has a name, and it is a group of EC2 instances, each of which runs the ECS Container Agent. Let’s compare this arrangement with some real-world objects.

The Cluster is a hotel, and it has a name, e.g. “myapp-services-cluster”. Each EC2 instance is a shared room in this hotel, and the room has a camera to observe the people in it. The camera performs one of the responsibilities of the ECS Container Agent, namely observing the Docker containers. The people in the room represent Docker containers (Tasks).

These EC2 instances use the ECS-optimized AMI, which includes the following:

- The latest version of the Amazon Linux AMI.

- The latest version of the ECS Container Agent.

- A Docker version compatible with the ECS Container Agent.

Each EC2 instance is called a Container Instance, and it must know the name of the cluster it will join. If we are using the ECS-optimized AMI, we need to set “ECS_CLUSTER=myapp-services-cluster” (with no space after the “=”) in /etc/ecs/ecs.config, and the preinstalled container agent will register the instance with that cluster automatically.

So that is what a cluster is all about: a bunch of EC2 instances whose container agents point to the same cluster name. Usually, we back the Cluster with an Auto Scaling Group, which can change its capacity according to load.
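To make the cluster-joining step concrete, here is a minimal Python sketch that builds an EC2 user data script appending the `ECS_CLUSTER` setting to `/etc/ecs/ecs.config`, the file the ECS Container Agent reads on an ECS-optimized AMI. The function name `ecs_user_data` is hypothetical; the resulting string is the kind of user data you might attach to the launch template of the cluster's Auto Scaling Group.

```python
import re

# ECS cluster names: up to 255 letters, numbers, hyphens, and underscores.
_CLUSTER_NAME = re.compile(r"[A-Za-z0-9_-]{1,255}")


def ecs_user_data(cluster_name: str) -> str:
    """Build a (hypothetical) user data script that points the ECS agent at a cluster.

    Assumes an ECS-optimized AMI, where the agent reads /etc/ecs/ecs.config at startup.
    """
    if not _CLUSTER_NAME.fullmatch(cluster_name):
        raise ValueError(f"invalid ECS cluster name: {cluster_name!r}")
    # No space after '=': the value must be exactly the cluster name.
    return (
        "#!/bin/bash\n"
        f"echo ECS_CLUSTER={cluster_name} >> /etc/ecs/ecs.config\n"
    )


if __name__ == "__main__":
    print(ecs_user_data("myapp-services-cluster"))
```

Rejecting names with stray spaces up front catches exactly the kind of “ECS_CLUSTER= name” typo that would otherwise leave the instance unregistered.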

2) Task Definition

A Task Definition is a set of specifications that defines everything required to run containers on a container instance. We can also think of a Task Definition as a blueprint for a running instance of the application. The things most commonly configured are the following:

- Docker image name(s)

- The CPU, memory, port mappings, entry points, etc. required for each Docker image

- Environment variables

- Log configuration
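As an illustration, the Python sketch below assembles a task definition covering the items listed above, using the field names of the ECS RegisterTaskDefinition JSON format (`containerDefinitions`, `portMappings`, `environment`, `logConfiguration`, and so on). The family, image, ports, sizes, log group, and region are all hypothetical values chosen for the example.

```python
import json


def build_task_definition(family: str, image: str) -> dict:
    """Return a task definition dict in the RegisterTaskDefinition JSON shape.

    All concrete values (names, ports, sizes, log group, region) are illustrative.
    """
    return {
        "family": family,
        "containerDefinitions": [
            {
                "name": "web",
                "image": image,                      # Docker image name
                "cpu": 256,                          # CPU units (1024 = one vCPU)
                "memory": 512,                       # hard memory limit in MiB
                "essential": True,
                "portMappings": [
                    # hostPort 0 requests a dynamic host port (bridge network mode)
                    {"containerPort": 8080, "hostPort": 0, "protocol": "tcp"}
                ],
                "entryPoint": ["python", "app.py"],
                "environment": [{"name": "APP_ENV", "value": "production"}],
                "logConfiguration": {
                    "logDriver": "awslogs",
                    "options": {
                        "awslogs-group": "/ecs/myapp",
                        "awslogs-region": "us-east-1",
                        "awslogs-stream-prefix": "web",
                    },
                },
            }
        ],
    }


if __name__ == "__main__":
    # Save this output as taskdef.json to register it, e.g. with
    # `aws ecs register-task-definition --cli-input-json file://taskdef.json`.
    print(json.dumps(build_task_definition("myapp", "myrepo/myapp:1.0"), indent=2))
```

Generating the definition from code like this keeps the image tag in one place, which is convenient when each deployment registers a new revision.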

So, if you’ve gotten your hands dirty with Docker, you most likely know these properties. Most of the container options we pass in a Task Definition are options we could also pass to Docker when creating containers.

As a reminder, Task Definitions are just a set of instructions. They are independent of Clusters, meaning that we can use them in any Cluster, but we need something to run them, and this is where Services come into the picture.

3) Service

So far, we have explored the Cluster and the Task Definition, but we need something to run the Task Definition on the Cluster, and a Service does that for us. Creating a Service tells our Cluster to go beyond just running Tasks: the Service also manages and monitors them. It is one of the most important parts of ECS. When we hand a Task Definition over to a Service, it does a number of things for us.

- Launches Tasks from the Task Definition on the best-suited Container Instances in the Cluster. It also lets us select a Task Placement strategy, with options such as AZ Balanced Spread and Bin Pack.

- Monitors the Tasks and reports back metrics. Usually, we use the service’s CPU and memory utilization metrics, which become quite useful when we integrate them with CloudWatch to perform custom actions such as dynamic scaling.

- Keeps the number of Tasks we specify up and running at all times. If a Task goes down due to a runtime error, the Service launches a new one. We can also set a maximum percent and a minimum healthy percent of Tasks. These percentages define the range for the number of Tasks our Service can have at any given time, and they are used when deploying a new Task Definition revision. For example, we might update our Task Definition to use an updated Docker image for the Containers.

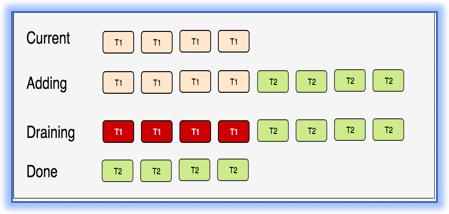
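To illustrate the difference between the Bin Pack and AZ Balanced Spread placement strategies mentioned above, here is a simplified Python model, not the actual ECS scheduler. It only considers memory. Bin pack picks the instance with the least free memory that still fits the Task. Spread picks an instance in the Availability Zone currently running the fewest Tasks. The `ContainerInstance` class, the instance IDs, and the AZ names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class ContainerInstance:
    instance_id: str
    az: str
    free_memory_mib: int
    tasks: int = 0


Strategy = Callable[[List[ContainerInstance], int], Optional[ContainerInstance]]


def _fits(instances: List[ContainerInstance], memory_mib: int) -> List[ContainerInstance]:
    return [i for i in instances if i.free_memory_mib >= memory_mib]


def pick_binpack(instances: List[ContainerInstance], memory_mib: int) -> Optional[ContainerInstance]:
    """Bin pack: the instance with the least free memory that still fits the task."""
    return min(_fits(instances, memory_mib), key=lambda i: i.free_memory_mib, default=None)


def pick_spread_az(instances: List[ContainerInstance], memory_mib: int) -> Optional[ContainerInstance]:
    """AZ spread: an instance in the AZ that currently runs the fewest tasks."""
    tasks_per_az: Dict[str, int] = {}
    for i in instances:
        tasks_per_az[i.az] = tasks_per_az.get(i.az, 0) + i.tasks
    # Tie-break on the instance's own task count so tasks also spread within an AZ.
    return min(_fits(instances, memory_mib),
               key=lambda i: (tasks_per_az[i.az], i.tasks), default=None)


def place(instances: List[ContainerInstance], memory_mib: int, strategy: Strategy) -> Optional[str]:
    """Place one task with `strategy`, reserve its memory, and return the instance id."""
    chosen = strategy(instances, memory_mib)
    if chosen is None:
        return None  # no instance has capacity: the task would stay pending
    chosen.free_memory_mib -= memory_mib
    chosen.tasks += 1
    return chosen.instance_id


if __name__ == "__main__":
    fleet = [ContainerInstance("i-a", "us-east-1a", 2048),
             ContainerInstance("i-b", "us-east-1b", 1024),
             ContainerInstance("i-c", "us-east-1a", 4096)]
    print([place(fleet, 512, pick_spread_az) for _ in range(3)])
```

Bin pack fills up the fullest instance first, which leaves other instances empty so the cluster can scale in. Spread sacrifices that density for resilience against losing a single Availability Zone.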

- Updates Tasks by handing them an updated Task Definition and draining the old running Tasks without losing any ongoing requests. It does this by deploying new Tasks incrementally and taking down old Tasks incrementally, using the maximum percent and minimum healthy percent parameters. Let’s take a small example to understand this concept. Suppose we set up a service with the following arguments:

- Number of Tasks: 4

- Minimum Healthy Percentage: 50%

- Maximum Percentage: 200%

Now, if the old Task Definition was T1 and we push a new Task Definition T2, the service deploys the change in the following manner. The RED icons represent removed tasks.

[Figure: ECS task update]

Because the maximum percentage is 200%, the service first adds 4 new Tasks in the second step (8 Tasks in total, i.e. 200%) and then drains the old ones in the third step. In this example, however, the minimum healthy percentage never comes into play. Let’s take another example to understand it, this time setting the maximum percentage to 100%.

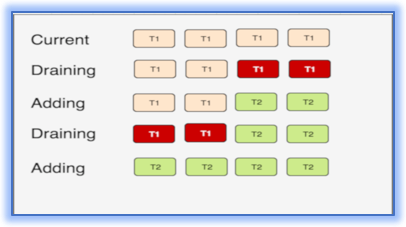

- Number of Tasks: 4

- Minimum Healthy Percentage: 50%

- Maximum Percentage: 100%

Now, because the maximum percentage is limited to 100%, no more than 4 Tasks can run under this service at any given time, and at least 2 healthy Tasks must always be running. This is reflected in the deployment: two T1 Tasks are removed and replaced by T2 in the second step, and then the remaining two T1 Tasks are removed in the third step and replaced by T2 in the fourth step.
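The two examples above can be reproduced with a small Python simulation. This is a simplified model under stated assumptions, not the ECS deployment algorithm: new Tasks are treated as healthy as soon as they start, and each step either starts a batch of new Tasks or stops a batch of old ones. ECS itself may overlap starts and stops. The upper bound is rounded down and the lower bound rounded up, and the function name `rolling_update` is hypothetical.

```python
from typing import List, Tuple


def rolling_update(desired: int, min_healthy_pct: int, max_pct: int) -> List[Tuple[int, int]]:
    """Simulate a rolling update; return (old, new) task counts after each step.

    Simplified model: new tasks count as healthy immediately, and each step
    either starts new-revision tasks or stops old-revision tasks.
    """
    upper = desired * max_pct // 100                 # max tasks at once (rounded down)
    lower = (desired * min_healthy_pct + 99) // 100  # min healthy tasks (rounded up)
    old, new = desired, 0
    steps = [(old, new)]
    while old > 0 or new < desired:
        running = old + new
        if running < upper and new < desired:
            # Room below the maximum: start new-revision tasks first.
            new += min(upper - running, desired - new)
        else:
            # At the maximum: stop old tasks without dropping below the minimum.
            to_stop = min(old, running - lower)
            if to_stop <= 0:
                raise ValueError("these percentages leave no room to replace any task")
            old -= to_stop
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    print(rolling_update(4, 50, 200))  # [(4, 0), (4, 4), (0, 4)]
    print(rolling_update(4, 50, 100))  # [(4, 0), (2, 0), (2, 2), (0, 2), (0, 4)]
```

Setting both percentages to 100% makes the simulation raise an error, because the service could neither start an extra Task nor stop an existing one.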

- Optionally scales our Tasks out/in based on traffic or events, known as Service Auto Scaling. The Service can automatically add/remove Tasks in the Cluster, following the usual AWS workflow:

- Select a CloudWatch metric to watch for the Service

- Configure a CloudWatch alarm that triggers when the metric crosses a threshold

- Take an action when the CloudWatch alarm triggers, such as adding or removing Tasks

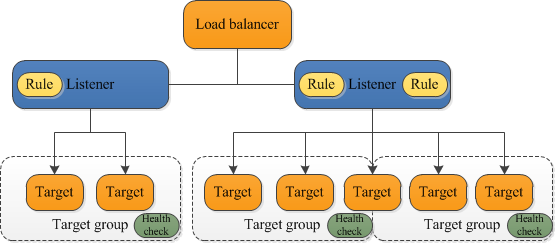
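The metric, alarm, and action workflow above can be sketched in Python as a single evaluation step. In practice CloudWatch evaluates the alarm and Application Auto Scaling adjusts the service’s desired count. This sketch only models the logic. It assumes a service CPU utilization metric, hypothetical thresholds of 70% (scale out) and 30% (scale in), three evaluation periods, and a step of one Task, clamped to the service’s minimum and maximum Task counts.

```python
from typing import Sequence


def alarm_fires(datapoints: Sequence[float], threshold: float, periods: int, above: bool) -> bool:
    """True if each of the last `periods` datapoints breaches the threshold."""
    recent = list(datapoints)[-periods:]
    if len(recent) < periods:
        return False  # not enough data yet to evaluate the alarm
    if above:
        return all(d > threshold for d in recent)
    return all(d < threshold for d in recent)


def next_desired_count(current: int, cpu: Sequence[float], min_tasks: int, max_tasks: int,
                       high: float = 70.0, low: float = 30.0,
                       periods: int = 3, step: int = 1) -> int:
    """One evaluation of a hypothetical scale-out/scale-in policy on CPU utilization."""
    if alarm_fires(cpu, high, periods, above=True):
        target = current + step   # scale out
    elif alarm_fires(cpu, low, periods, above=False):
        target = current - step   # scale in
    else:
        target = current          # no alarm: leave the desired count alone
    return max(min_tasks, min(max_tasks, target))  # clamp to the allowed range


if __name__ == "__main__":
    print(next_desired_count(2, [80.0, 85.0, 90.0], min_tasks=1, max_tasks=4))
```

Requiring several consecutive breaching datapoints, rather than a single spike, keeps the service from scaling back and forth on short bursts of load.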

4) Application Load Balancer (ALB)

Although the ALB is part of Elastic Load Balancing rather than ECS itself, it integrates closely with ECS and is responsible for distributing incoming traffic evenly across Tasks. It accepts incoming traffic on a predefined protocol and port and forwards it to Target Groups based on routing rules. In ECS, a Target Group is where the Service registers its Tasks, and once they are registered, the ALB routes traffic evenly across them. A single ALB can be shared by several services, which is why the Target Group sits between the Service and the ALB as a grouping of Tasks.

If you’ve worked with Elastic Load Balancers, this points out the difference between Classic and Application Load Balancers: Classic Load Balancers spread traffic between servers, while Application Load Balancers spread traffic between applications. In this case, the applications are our ECS Tasks.

ALB has a few primary components:

- Target Groups: a group of applications (in our case, Tasks) that receive load-balanced traffic.

- Listeners: the ports and protocols the ALB is configured to listen on, e.g. port 80 with protocol HTTP.

- Listener Rules: when traffic arrives on a particular Listener, a rule forwards it to a particular Target Group.
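The relationship between Listeners, Listener Rules, and Target Groups can be modelled with a toy Python router. This is a simplified illustration, not the ALB implementation. Rules are checked in priority order, lowest value first. Unmatched requests fall through to the listener’s default Target Group. Each Target Group hands out its registered Task endpoints round robin. Path matching uses `fnmatchcase` for `*` and `?` wildcards as an approximation of ALB path patterns. The class names, target group names, and IP:port endpoints are hypothetical.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase
from typing import List, Tuple


@dataclass
class TargetGroup:
    """Registered Task endpoints ("ip:port"), served round robin."""
    name: str
    targets: List[str] = field(default_factory=list)
    _next: int = field(default=0, repr=False)

    def register(self, target: str) -> None:
        self.targets.append(target)

    def next_target(self) -> str:
        if not self.targets:
            raise RuntimeError(f"no registered targets in {self.name}")
        target = self.targets[self._next % len(self.targets)]
        self._next += 1
        return target


@dataclass
class Listener:
    port: int
    protocol: str
    default_group: TargetGroup
    rules: List[Tuple[int, str, TargetGroup]] = field(default_factory=list)

    def add_rule(self, priority: int, path_pattern: str, group: TargetGroup) -> None:
        self.rules.append((priority, path_pattern, group))
        self.rules.sort(key=lambda rule: rule[0])  # lowest priority value is checked first

    def route(self, path: str) -> Tuple[str, str]:
        """Return (target group name, chosen target) for a request path."""
        for _, pattern, group in self.rules:
            if fnmatchcase(path, pattern):  # '*' and '?' wildcards, case-sensitive
                return group.name, group.next_target()
        return self.default_group.name, self.default_group.next_target()


if __name__ == "__main__":
    users = TargetGroup("users-tg")
    users.register("10.0.1.10:32768")
    users.register("10.0.2.11:32769")
    web = TargetGroup("web-tg")
    web.register("10.0.1.12:32770")
    listener = Listener(80, "HTTP", web)
    listener.add_rule(10, "/users/*", users)
    for path in ("/users/42", "/users/7", "/index.html"):
        print(path, "->", listener.route(path))
```

Because each service owns its own Target Group, one ALB listener can front several services simply by adding a rule per path.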
